DevOps engineers often deploy Helm charts for Kubernetes applications across different environments, including AWS. After a chart is deployed, however, DNS records still have to be added manually in AWS Route 53 before the application is reachable. Adding these records by hand is tedious, and ExternalDNS can automate the process.

What is ExternalDNS?

ExternalDNS is not a DNS server itself. It is a Kubernetes add-on that watches Services and Ingresses in the cluster and dynamically creates or updates the matching records in an external DNS provider, for example AWS Route 53.
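To illustrate the Service case, here is a minimal sketch of a Service that ExternalDNS could pick up. The Service name, selector, ports, and hostname are hypothetical placeholders. The sketch assumes ExternalDNS runs with service as one of its sources and uses the same external-dns.alpha.kubernetes.io/hostname annotation that appears in the RabbitMQ example later in this article.

```yaml
# Hypothetical Service; every name and the hostname are placeholders.
apiVersion: v1
kind: Service
metadata:
  name: demo-app
  annotations:
    # ExternalDNS reads this annotation to decide which record to manage.
    external-dns.alpha.kubernetes.io/hostname: demo.test.devops.com
spec:
  type: LoadBalancer
  selector:
    app: demo-app
  ports:
    - port: 80
      targetPort: 8080
```

With a Service like this, the record would point at the load balancer that AWS provisions for it.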

What is a HelmFile?

Helmfile lets you declare the desired state of the Helm releases in a cluster in a single YAML file, bundling multiple Helm releases (that is, multiple Helm charts) together. The chart value files can be kept in a directory under version control. It also lets you vary the release specification by environment (develop, test, production). More information is available in the Helmfile documentation.
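As a rough sketch of the per-environment idea, the helmfile below declares three environments and selects a values file by environment name. The file name helmfile.yaml.gotmpl and the values/ directory layout are assumptions made for illustration, not part of the setup described in this article.

```yaml
# helmfile.yaml.gotmpl (hypothetical file name and directory layout)
environments:
  develop:
  test:
  production:
---
repositories:
  - name: external-dns
    url: https://kubernetes-sigs.github.io/external-dns/

releases:
  - name: external-dns
    namespace: kube-system
    chart: external-dns/external-dns
    values:
      # Resolves to values/develop.yaml, values/test.yaml, or
      # values/production.yaml, depending on the selected environment.
      - values/{{ .Environment.Name }}.yaml
```

With such a layout, a command like helmfile -e production -f helmfile.yaml.gotmpl diff would render the release with the production values.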

Deploy External DNS on Kubernetes Cluster Using Helmfile (AWS EKS)

Prerequisites:

Create an IAM policy and an IAM role that Kubernetes pods are allowed to assume.

The policy below allows ExternalDNS to list hosted zones and to update Route 53 resource record sets. You can customize it to fit your requirements.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "route53:ChangeResourceRecordSets"
          ],
          "Resource": [
            "arn:aws:route53:::hostedzone/*"
          ]
        },
        {
          "Effect": "Allow",
          "Action": [
            "route53:ListHostedZones",
            "route53:ListResourceRecordSets"
          ],
          "Resource": [
            "*"
          ]
        }
      ]
    }

Then create an IAM role to be assumed by the ExternalDNS pod, and attach the IAM policy created above to that role.

Please refer to the appropriate documentation if you are using kiam or kube2iam.

Deploy ExternalDNS on AWS EKS using helmfile

The Helm chart for ExternalDNS is available on GitHub. Here we wrap that chart in a helmfile and override its values.

Create a helmfile named externaldns.yaml.

In the podAnnotations section, add the ARN of the IAM role created earlier.

    # helmfile -f externaldns.yaml diff
    repositories:
      - name: external-dns
        url: https://kubernetes-sigs.github.io/external-dns/
    releases:
      - name: external-dns
        namespace: kube-system
        chart: external-dns/external-dns
        version: 1.7.0
        values:
          - podAnnotations:
              iam.amazonaws.com/role: "<IAM role ARN>"
        wait: true
        timeout: 120
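The podAnnotations approach relies on kiam or kube2iam. If the cluster instead uses EKS IAM roles for service accounts (an assumption, since this article does not cover that setup), the role is usually attached through a service account annotation rather than a pod annotation. A minimal sketch of the values entry follows. It assumes that the chart exposes serviceAccount.annotations and that the role's trust policy allows the cluster's OIDC provider.

```yaml
# Alternative values entry for the external-dns release (sketch only).
# Assumes IAM roles for service accounts are already configured on the cluster.
values:
  - serviceAccount:
      create: true
      annotations:
        # Replace with the ARN of the IAM role created earlier.
        eks.amazonaws.com/role-arn: "<IAM role ARN>"
```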

You can adjust the chart values to suit your requirements by adding parameters for ExternalDNS, for example:

    sources:
      - service
      - ingress
    domain-filter: test.devops.com # limits ExternalDNS to hosted zones matching this domain; omit to process all available hosted zones
    provider: aws
    policy: upsert-only # prevents ExternalDNS from deleting any records; omit to enable full synchronization
    aws-zone-type: public # public or private hosted zones
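How these parameters are spelled in the helmfile depends on the chart. The sketch below shows one way they might map onto the values of the kubernetes-sigs external-dns chart. It assumes the chart exposes provider, sources, domainFilters, policy, and extraArgs keys, so check the values.yaml of the chart version you deploy before relying on it.

```yaml
# Sketch: ExternalDNS parameters expressed as chart values in externaldns.yaml.
# Key names are assumptions to verify against the chart's values.yaml.
releases:
  - name: external-dns
    namespace: kube-system
    chart: external-dns/external-dns
    version: 1.7.0
    values:
      - provider: aws
        sources:
          - service
          - ingress
        # Assumed chart key corresponding to the domain-filter parameter.
        domainFilters:
          - test.devops.com
        policy: upsert-only
        # Assumed mechanism for flags that have no dedicated chart key.
        extraArgs:
          - --aws-zone-type=public
        podAnnotations:
          iam.amazonaws.com/role: "<IAM role ARN>"
    wait: true
    timeout: 120
```

Running helmfile -f externaldns.yaml diff after such a change shows which arguments the rendered Deployment would receive.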

Check the syntax and preview the changes with the diff command:

    helmfile -f externaldns.yaml diff

If you are satisfied with the diff, run sync or apply:

    helmfile -f externaldns.yaml apply

Once deployed, check that the pod is running:

    kubectl get pods -n kube-system

Deploy a Kubernetes RabbitMQ application using Helmfile

In this example, we deploy a RabbitMQ application and see how ExternalDNS works with ALB ingress objects. Once deployed, ExternalDNS dynamically adds DNS records based on the hostname and the ALB created for the ingress.

The Helm chart for RabbitMQ is available on GitHub. As before, we wrap the chart in a helmfile and override its values.

Create a helmfile named rabbitmq.yaml.

    # helmfile -f rabbitmq.yaml diff
    repositories:
      - name: bitnami
        url: https://charts.bitnami.com/bitnami
    releases:
      - name: rabbitmq
        namespace: devops
        chart: bitnami/rabbitmq
        version: 8.24.12
        values:
          - auth:
              username: "rabbitmq"
              password: "rabbitmq"
              erlangCookie: "ajhdkhhihkds"
            persistence:
              enabled: true
            service:
              type: NodePort
            ingress:
              enabled: true
              path: /*
              hostname: rabbitmq.devops.test.com
              annotations:
                kubernetes.io/ingress.class: alb
                external-dns.alpha.kubernetes.io/hostname: rabbitmq.devops.test.com
                alb.ingress.kubernetes.io/actions.response-404: |
                  {"Type":"fixed-response","FixedResponseConfig":{"ContentType":"text/plain","StatusCode":"404","MessageBody":"devops - 404 Page not found"}}
                alb.ingress.kubernetes.io/actions.ssl-redirect: '{"Type": "redirect", "RedirectConfig": { "Protocol": "HTTPS", "Port": "443", "StatusCode": "HTTP_301"}}'
                alb.ingress.kubernetes.io/certificate-arn: "arn:aws:acm:us-west-2:738379792:certificate/97394d-72255-6fd1b-a59e-79b9a80w42451"
                alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}]'
                alb.ingress.kubernetes.io/load-balancer-attributes: access_logs.s3.enabled=true,access_logs.s3.bucket=devops-elb-logs,access_logs.s3.prefix=devops
                alb.ingress.kubernetes.io/scheme: internet-facing
                alb.ingress.kubernetes.io/security-groups: sg-08043e2c21117694234
                alb.ingress.kubernetes.io/subnets: subnet-b5f64bff,subnet-431e40,subnet-564e90,subnet-58863b8
                alb.ingress.kubernetes.io/group.name: devops
        wait: true
        timeout: 600

In the ingress annotations, add the ExternalDNS annotation external-dns.alpha.kubernetes.io/hostname so that a record is created for the resulting ALB.

The ingress above results in an (IPv4) ALB being created in AWS, which forwards traffic to the RabbitMQ application.

Check the syntax and preview the changes with the diff command:

    helmfile -f rabbitmq.yaml diff

If you are satisfied with the diff, run sync or apply:

    helmfile -f rabbitmq.yaml apply

Once deployed, check that the pod is running in the devops namespace:

    kubectl get pods -n devops

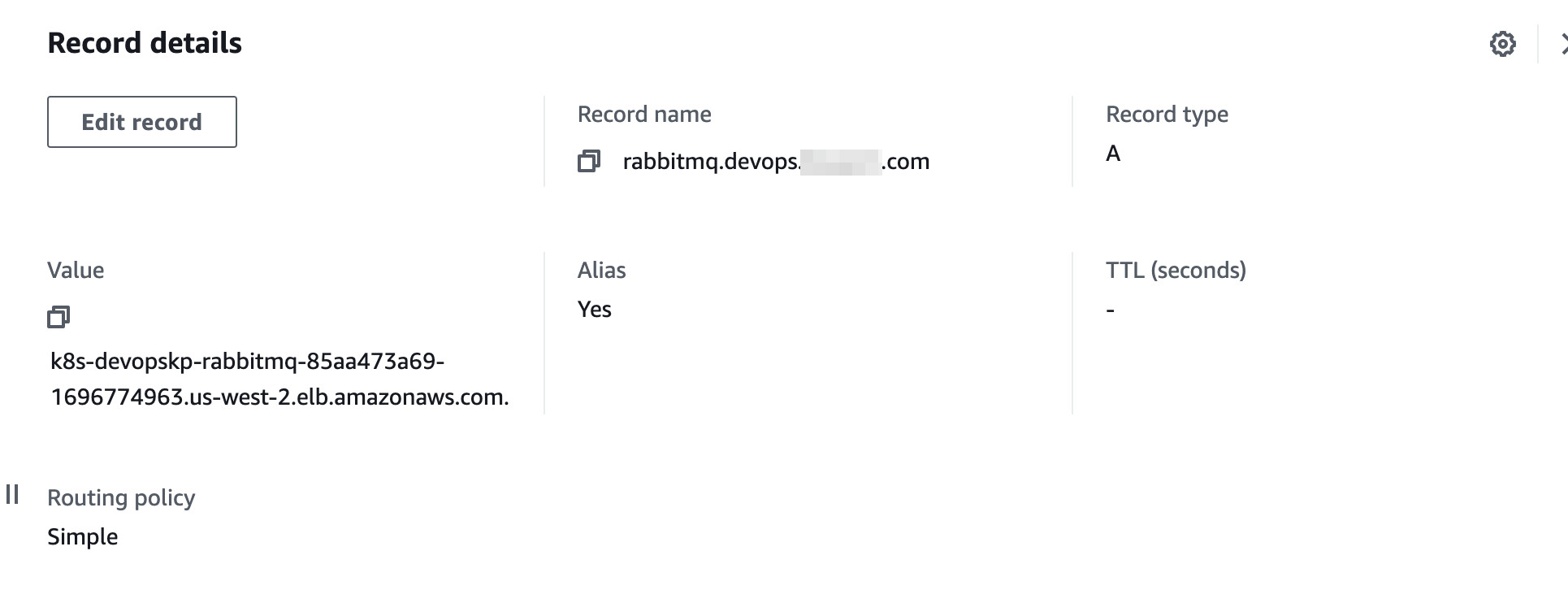

If the source is ingress, ExternalDNS creates DNS records based on the hosts specified in the ingress objects. The example above results in one alias record, rabbitmq.devops.test.com, which is an alias of the ALB associated with the ingress object.
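To make the ingress case concrete, here is a simplified, hypothetical view of the kind of Ingress object that the RabbitMQ release produces. The object rendered by the chart has more fields and annotations, and the backend service name and port shown here are assumptions for illustration only.

```yaml
# Simplified sketch of the Ingress that ExternalDNS watches.
# Backend service name and port are assumptions, not taken from the chart.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: rabbitmq
  namespace: devops
  annotations:
    kubernetes.io/ingress.class: alb
    external-dns.alpha.kubernetes.io/hostname: rabbitmq.devops.test.com
spec:
  rules:
    # ExternalDNS derives the record name from this host.
    - host: rabbitmq.devops.test.com
      http:
        paths:
          - path: /*
            pathType: ImplementationSpecific
            backend:
              service:
                name: rabbitmq
                port:
                  number: 15672
```

Once the ALB is provisioned, its DNS name appears in the Ingress status, and that is the target ExternalDNS uses for the alias record.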

About the author

Suraj Kamble is a DevOps engineer at Excellarate. He has expertise in tools and technologies such as Docker, Kubernetes, Terraform, Jenkins, CloudFormation, GitLab and Bitbucket CI/CD, Sumo Logic, Datadog, Grafana and Prometheus. Suraj holds a bachelor’s degree in computer engineering from the Savitribai Phule Pune University.